HALEXA

voice recognition | virtual reality | text-to-speech | conversation | installation

Halexa is a virtual reality experience that questions the present and future of voice assistants, and of AI in general.

CHALLENGE

To build an interactive experience in a virtual space using voice recognition.

ROLE : Creator, Developer

TOOLS : Unity3D, IBM Watson, Windows Dictation Recognizer, C#

SHOWCASE : ITP Winter Show 2017

INTRODUCTION

The title 'Halexa' combines Amazon Alexa and HAL 9000 (from 2001: A Space Odyssey).

The experience consists of conversations with a looming, monolithic Alexa that commands the user instead of following the user's commands.

The central idea is to swap the roles of the user and the voice assistant in virtual reality.

CONCEPT

The concept revolved around the master-slave relationship between users and voice assistants.

Halexa, as an experience, questions this relationship and speculates on how the two sides might switch places in the future.

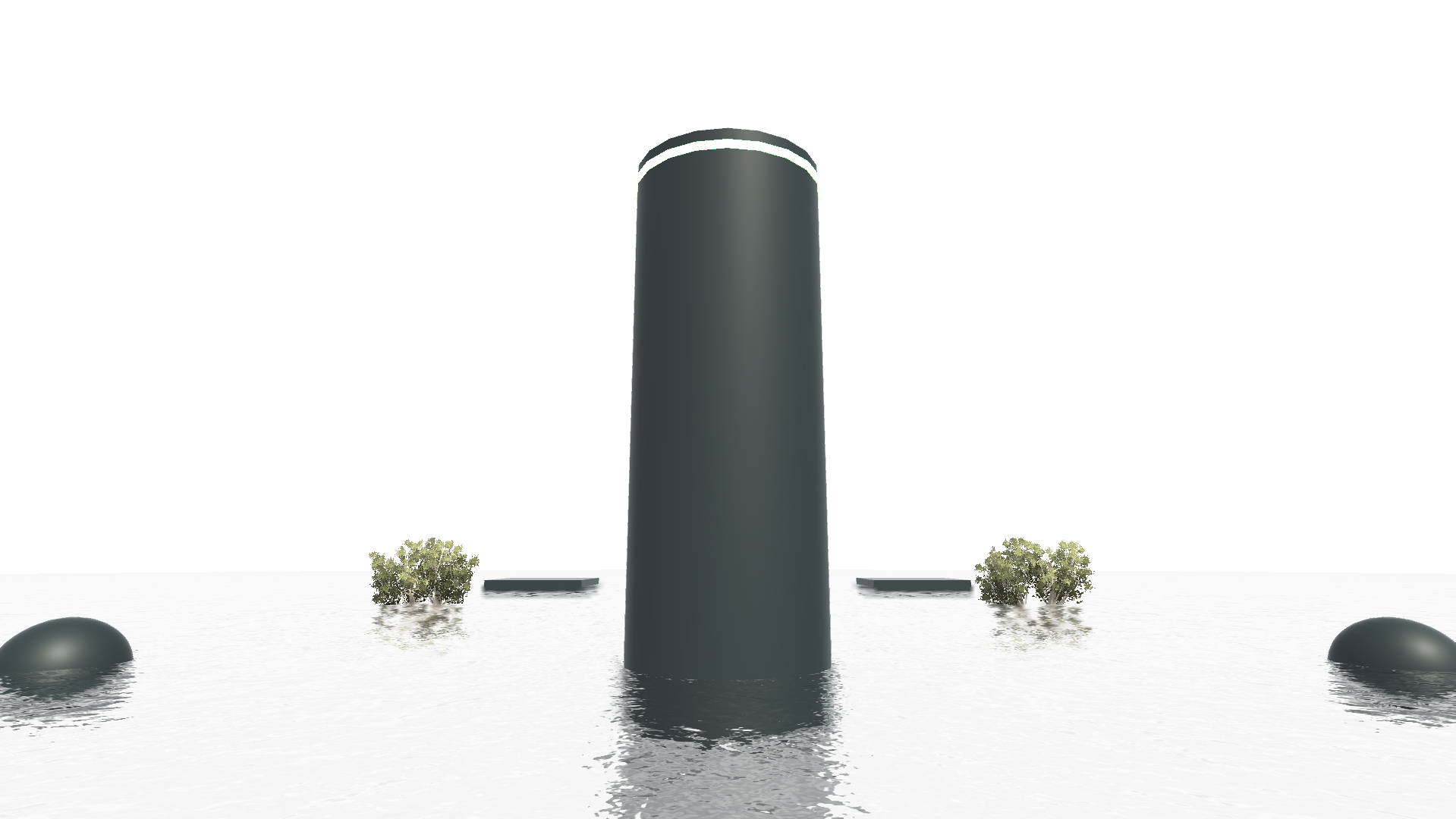

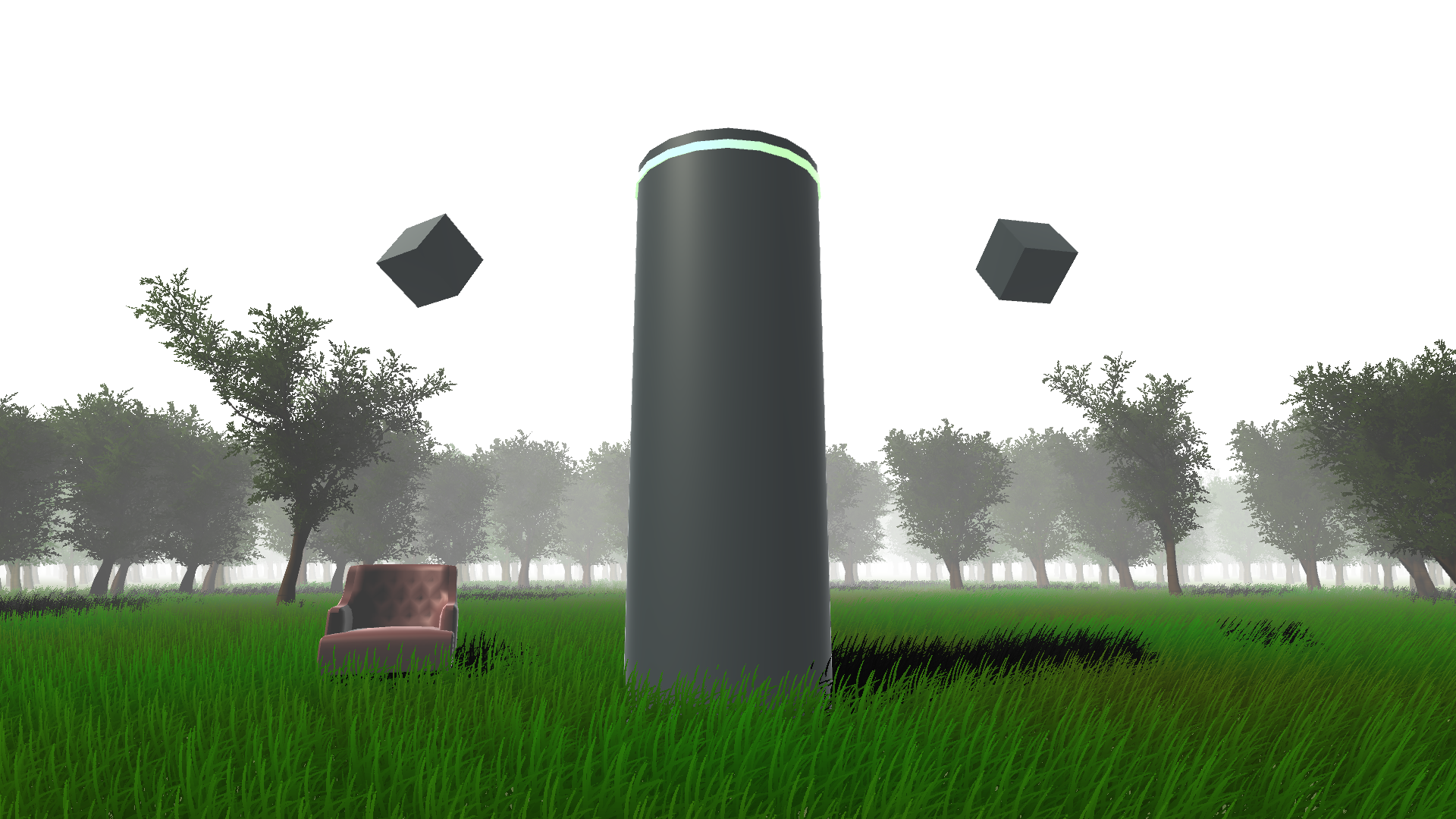

Aesthetically, the voice assistant is presented in an absurdist setting where the user looks up to the looming monolith while talking to it.

The only capability that the user holds in this space is the ability to speak.

Moodboards #1, #2 and #3

DESIGN PROCESS

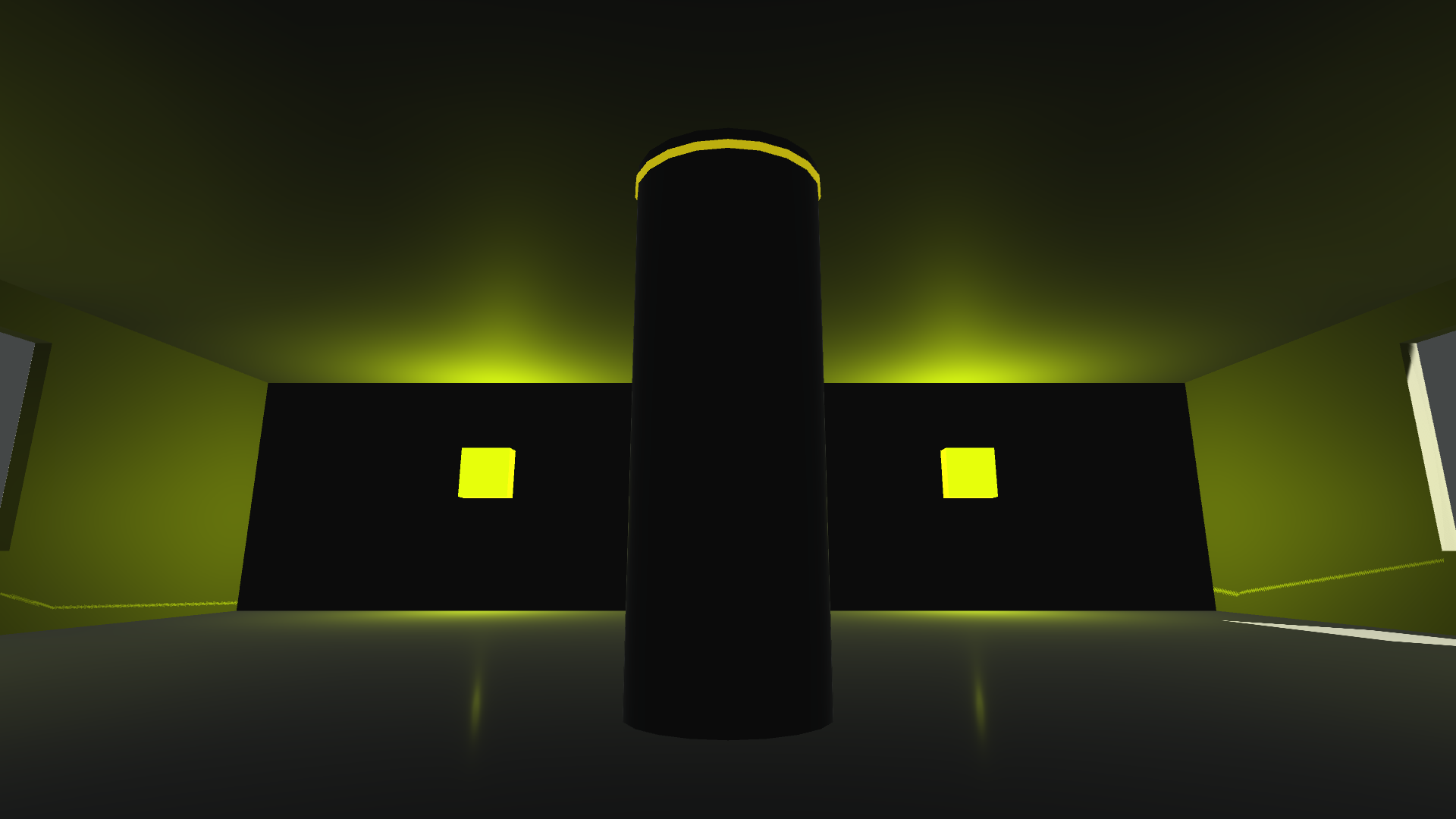

The design rested on two aspects: absurdity and reverence.

The experience consisted of five scenes built to grow eerier as the narrative moved forward.

In order, the scenes represented introduction, tranquility, skepticism, paranoia and chaos.

The visual aesthetic was designed around this narrative arc.

CONVERSATION

Conversation was central to the experience. It was staged between two characters: Halexa and the user.

Halexa is domineering and commanding. It assigns the user a made-up name and addresses the user by that name throughout the experience.

Every sentence Halexa speaks starts with that name, much as Amazon's Alexa is activated by its trigger word.

The user has no capability except to respond. They cannot start conversations, and they cannot give instructions.

IBM Watson and Windows Dictation Recognizer were used to accomplish speech-to-text, dialog flow and text-to-speech.
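The turn-taking rules above (Halexa always speaks first, every line opens with the user's assigned name, the user can only answer) can be sketched as a small loop. This is a minimal Python sketch under stated assumptions, not the project's actual Unity/C# code: `Halexa`, `SCENES`, `listen`, `speak` and `reply_to` are hypothetical names, the per-scene script is invented for illustration, and the three callables merely stand in for the speech-to-text, text-to-speech and dialog-flow services (IBM Watson and the Windows Dictation Recognizer), whose real APIs are not modeled here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# The five narrative beats from the design process (assumed to drive the script).
SCENES = ["introduction", "tranquility", "skepticism", "paranoia", "chaos"]


@dataclass
class Halexa:
    user_name: str                  # made-up name Halexa assigns to the user
    script: Dict[str, List[str]]    # hypothetical prompts per scene
    transcript: List[str] = field(default_factory=list)

    def say(self, text: str, speak: Callable[[str], None]) -> None:
        # Every utterance opens with the assigned name, the way
        # "Alexa" opens a command to Amazon's assistant.
        utterance = f"{self.user_name}, {text}"
        self.transcript.append(f"HALEXA: {utterance}")
        speak(utterance)

    def run(
        self,
        listen: Callable[[], str],            # stand-in for speech-to-text
        speak: Callable[[str], None],         # stand-in for text-to-speech
        reply_to: Callable[[str, str], str],  # stand-in for the dialog flow
    ) -> List[str]:
        # Halexa always initiates; the user's only move is to answer.
        for scene in SCENES:
            for prompt in self.script.get(scene, []):
                self.say(prompt, speak)
                answer = listen()
                self.transcript.append(f"USER: {answer}")
                self.say(reply_to(scene, answer), speak)
        return self.transcript


if __name__ == "__main__":
    # Hypothetical one-line script; typed answers replace the microphone.
    script = {"introduction": ["I will call you Unit Seven. Say yes."]}
    answers = iter(["yes"])
    Halexa("Unit Seven", script).run(
        listen=lambda: next(answers),
        speak=print,
        reply_to=lambda scene, answer: "good." if answer == "yes" else "obey.",
    )
```

Passing the recognition, synthesis and dialog services in as plain callables keeps the sketch testable without a microphone; in an installation, those calls would go to the actual speech services instead.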

USER TESTS

User tests were helpful in two regards.

First, they revealed the common queries and statements users tended to say, which were then used to refine the dialog flow in Watson.

Second, they tested how coherent the conversation was within the experience.

FINAL RESULTS

The following are 2D images from inside the experience, as it was presented at the ITP Winter Show 2017.